Decoding Data Issues: Fixing Common Character Encoding Problems

Do you ever find yourself staring blankly at garbled text on your screen, a string of seemingly random characters that bear no resemblance to the information you intended to read? This frustrating situation, often the result of character encoding issues, is far more prevalent than you might think, and understanding its root causes and solutions is crucial for anyone working with digital text.

Encoding problems can manifest in various ways, from simple gibberish to completely unreadable strings of characters, leading to confusion, lost time, and potential data corruption. This article will delve into the intricacies of character encoding, explore common scenarios where these problems arise, and provide practical solutions to ensure your data remains clear, accurate, and easily accessible. While tools like `utf8_decode` offer a quick fix, the most effective approach frequently involves addressing the underlying encoding errors directly within the data itself, a principle we'll explore further.

Consider a scenario in which you are using CAD software and the mouse functions do not work as expected. The user's environment is TFAS11 on Windows 10 Pro 64-bit, with a Logicool Anywhere MX mouse whose buttons are configured through SetPoint. When drawing in TFAS, the mouse functions do not work properly. How can this be fixed?

Let's explore the scenario of data corruption and display issues when handling text, specifically focusing on common problems that arise when working with databases, software applications, and web development projects. We will discuss how character encoding comes into play, the challenges that can occur, and the methods to ensure your text data is displayed accurately. Character encoding is a fundamental aspect of data storage and transfer, and understanding the underlying principles is essential for anyone dealing with digital information.

| Category | Details |
|---|---|
| Problem Scenario 1: Database Backup and Restore | When backing up and restoring a database, the character set selected during the backup process is critical. If the database was created with a specific character set (e.g., UTF-8) and the backup is created without specifying the same character set, or if the restore process uses a different character set, encoding errors can occur. The resulting data may display incorrectly. |
| Problem Scenario 2: File Format and Encoding | The format and encoding of the file in which your data is saved play a vital role. Different file formats (e.g., CSV, TXT, XML) support various character encodings. For example, a text file saved in an encoding other than the one the reading system expects can be misinterpreted, leading to garbled output. |
| Problem Scenario 3: Software and Application Compatibility | Inconsistencies in character encoding can arise when data is transferred between different software applications or systems. If one application saves data in one encoding and another application expects a different one, encoding errors are very likely. |


The foundation of all digital text is the humble character. Each character, be it a letter, a number, a punctuation mark, or a special symbol, must be represented in a way that computers can understand. This is where character encoding comes in. At its core, character encoding is a mapping system that assigns a unique numerical value (a code point) to each character. These code points are then converted into binary form for storage and processing. Different character encodings employ different methods to accomplish this. Some of the most common include ASCII, Latin-1 (ISO-8859-1), and UTF-8.

ASCII (American Standard Code for Information Interchange) is one of the earliest character encodings and was designed to represent the English alphabet, digits, and basic punctuation. Its major limitation is size: it defines only 128 characters, so it cannot represent accented letters or characters from languages other than English. This limitation was the main reason newer encodings were developed.
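To make the 128-character limit concrete, here is a minimal C sketch, assuming the text is held in a byte buffer; the function name `is_ascii` is hypothetical. It checks that every byte falls in the range 0x00 to 0x7F, which is all that ASCII defines.

```c
#include <stdbool.h>
#include <stddef.h>

/* Returns true if every byte in buf is a 7-bit ASCII value (0x00-0x7F).
   Any byte >= 0x80 means the text uses some other encoding
   (Latin-1, UTF-8, ...), because ASCII has only 128 characters. */
bool is_ascii(const unsigned char *buf, size_t len)
{
    for (size_t i = 0; i < len; i++) {
        if (buf[i] > 0x7F) {
            return false;
        }
    }
    return true;
}
```

A `false` result tells you the text needs a wider encoding, but not which one.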

Latin-1 (ISO-8859-1) extends ASCII to 256 characters, adding accented letters and symbols commonly used in Western European languages. It covers more characters than ASCII but still cannot represent most of the world's languages, such as Greek, Russian, Chinese, or Japanese.

UTF-8 (Unicode Transformation Format-8) is the dominant character encoding used on the internet today. It is a variable-width encoding: each character is stored as one to four bytes. This flexibility lets UTF-8 represent every Unicode character, covering virtually all of the world's writing systems along with symbols and other special characters. It is backward-compatible with ASCII: every ASCII character is encoded in UTF-8 as the same single byte, so pure ASCII text is already valid UTF-8.
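The variable-width scheme can be sketched in C as follows. This illustrates the standard UTF-8 bit layout rather than production code, and the function name `utf8_encode` is hypothetical. Code points up to U+007F take one byte (the ASCII range), up to U+07FF two bytes, up to U+FFFF three bytes, and up to U+10FFFF four bytes.

```c
#include <stddef.h>
#include <stdint.h>

/* Encodes one Unicode code point as UTF-8 into out (4 bytes available).
   Returns the number of bytes written (1-4), or 0 for values that are not
   encodable: UTF-16 surrogates (U+D800-U+DFFF) and anything above U+10FFFF. */
size_t utf8_encode(uint32_t cp, unsigned char out[4])
{
    if (cp <= 0x7F) {                   /* 0xxxxxxx: identical to ASCII */
        out[0] = (unsigned char)cp;
        return 1;
    }
    if (cp <= 0x7FF) {                  /* 110xxxxx 10xxxxxx */
        out[0] = (unsigned char)(0xC0 | (cp >> 6));
        out[1] = (unsigned char)(0x80 | (cp & 0x3F));
        return 2;
    }
    if (cp >= 0xD800 && cp <= 0xDFFF) {
        return 0;                       /* surrogates are not characters */
    }
    if (cp <= 0xFFFF) {                 /* 1110xxxx 10xxxxxx 10xxxxxx */
        out[0] = (unsigned char)(0xE0 | (cp >> 12));
        out[1] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
        out[2] = (unsigned char)(0x80 | (cp & 0x3F));
        return 3;
    }
    if (cp <= 0x10FFFF) {               /* 11110xxx followed by 3 continuations */
        out[0] = (unsigned char)(0xF0 | (cp >> 18));
        out[1] = (unsigned char)(0x80 | ((cp >> 12) & 0x3F));
        out[2] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
        out[3] = (unsigned char)(0x80 | (cp & 0x3F));
        return 4;
    }
    return 0;                           /* beyond the Unicode range */
}
```

For example, `é` (U+00E9) encodes as the two bytes `C3 A9`, and the euro sign (U+20AC) as the three bytes `E2 82 AC`.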

The choice of character encoding is not merely a technical detail; it has profound implications for data integrity and accessibility. An incorrectly chosen encoding can lead to a multitude of problems, from simple display errors to data corruption and information loss. When character encoding mismatches occur, the system attempts to interpret a sequence of bytes using the wrong character map, resulting in what appears to be gibberish or completely unrecognizable characters. These encoding errors make it difficult to read, process, or utilize the data. They can also lead to significant problems with the functionality of a system. For instance, in a database, improperly encoded data could prevent searches from returning the correct results.
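The following C sketch shows how such a mismatch produces gibberish (often called mojibake). The hypothetical function `latin1_to_utf8` treats each input byte as a Latin-1 character and re-encodes it as UTF-8. That is the correct conversion when the input really is Latin-1, but when the input is already UTF-8, each multi-byte character is split into several wrong characters.

```c
#include <stddef.h>

/* Interprets each input byte as a Latin-1 (ISO-8859-1) character and writes
   its UTF-8 encoding to out. Latin-1 byte values equal their Unicode code
   points, so bytes < 0x80 are copied and bytes >= 0x80 become two bytes.
   Returns the number of bytes written (excluding the NUL terminator),
   or (size_t)-1 if out is too small. */
size_t latin1_to_utf8(const unsigned char *in, size_t len,
                      unsigned char *out, size_t out_size)
{
    size_t o = 0;
    if (out_size == 0) {
        return (size_t)-1;
    }
    for (size_t i = 0; i < len; i++) {
        unsigned char c = in[i];
        size_t need = (c < 0x80) ? 1 : 2;
        if (o + need + 1 > out_size) {  /* +1 keeps room for the terminator */
            return (size_t)-1;
        }
        if (c < 0x80) {
            out[o++] = c;
        } else {
            out[o++] = (unsigned char)(0xC0 | (c >> 6));
            out[o++] = (unsigned char)(0x80 | (c & 0x3F));
        }
    }
    out[o] = '\0';
    return o;
}
```

Feeding it the UTF-8 bytes of `é` (`C3 A9`) yields `C3 83 C2 A9`, which displays as `Ã©`, a typical sign of this mistake.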

The rise of Unicode and UTF-8 has been critical in addressing the challenges posed by diverse character sets and different languages. Unicode provides a unified character set that encompasses characters from almost every written language in the world. Each character is assigned a unique code point, ensuring that it can be consistently represented and understood across different systems. UTF-8, the most widely used encoding of Unicode (others include UTF-16 and UTF-32), has become the de facto standard for the web, enabling the exchange and display of text in many languages. Services such as Google Translate, which translates words, phrases, and web pages between English and over 100 languages, depend on this kind of consistent representation.

When facing character encoding issues, several strategies can help. A decoding function such as `utf8_decode` in PHP can provide a quick, temporary fix, but it is lossy: it converts UTF-8 to ISO-8859-1 and replaces any character that ISO-8859-1 cannot represent with `?` (the function is also deprecated as of PHP 8.2). It is usually better to correct the encoding errors in the data itself. The right approach depends on the root cause.
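To illustrate why such a quick fix loses information, here is a C sketch of a conversion in the spirit of `utf8_decode`. It is not PHP's implementation, and the names `utf8_next` and `utf8_to_latin1_lossy` are hypothetical. Each valid UTF-8 character up to U+00FF is written as one Latin-1 byte; everything else becomes `?`.

```c
#include <stddef.h>
#include <stdint.h>

/* Decodes one UTF-8 sequence from s (n bytes available) into *cp.
   Returns its length (1-4), or 0 if the bytes are not valid UTF-8. */
static size_t utf8_next(const unsigned char *s, size_t n, uint32_t *cp)
{
    size_t len;
    uint32_t min;
    if (n == 0) return 0;
    if (s[0] < 0x80) { *cp = s[0]; return 1; }
    if ((s[0] & 0xE0) == 0xC0)      { len = 2; *cp = s[0] & 0x1F; min = 0x80; }
    else if ((s[0] & 0xF0) == 0xE0) { len = 3; *cp = s[0] & 0x0F; min = 0x800; }
    else if ((s[0] & 0xF8) == 0xF0) { len = 4; *cp = s[0] & 0x07; min = 0x10000; }
    else return 0;                          /* invalid lead byte */
    if (len > n) return 0;                  /* truncated sequence */
    for (size_t i = 1; i < len; i++) {
        if ((s[i] & 0xC0) != 0x80) return 0; /* not a continuation byte */
        *cp = (*cp << 6) | (s[i] & 0x3F);
    }
    if (*cp < min || *cp > 0x10FFFF || (*cp >= 0xD800 && *cp <= 0xDFFF))
        return 0;                           /* overlong, out of range, surrogate */
    return len;
}

/* Converts UTF-8 to Latin-1; characters above U+00FF and invalid bytes
   become '?'. out must hold len + 1 bytes (the output is never longer
   than the input). Returns the output length. */
size_t utf8_to_latin1_lossy(const unsigned char *in, size_t len,
                            unsigned char *out)
{
    size_t i = 0, o = 0;
    while (i < len) {
        uint32_t cp;
        size_t step = utf8_next(in + i, len - i, &cp);
        if (step == 0) {                    /* invalid byte: emit '?' and skip it */
            out[o++] = '?';
            i++;
        } else {
            out[o++] = (cp <= 0xFF) ? (unsigned char)cp : '?';
            i += step;
        }
    }
    out[o] = '\0';
    return o;
}
```

For instance, `€5` becomes `?5`: the euro sign is simply gone, which is why correcting the data is preferable to converting it on the fly.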

Database Level: For databases, it's essential to ensure that the database, tables, and columns are correctly configured with the appropriate character set and collation. The character set defines how characters are stored, while the collation defines the rules for sorting and comparing characters. When restoring database backups, make sure the character set is correctly specified during the process.

File Handling: When dealing with files, identify the encoding used to create the file and make sure your software or application is configured to read the file in that encoding. Many text editors and applications allow you to specify or detect the character encoding.
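When a file's encoding is unknown, one common heuristic is to check whether its bytes form valid UTF-8: text in Latin-1 or another legacy encoding that contains accented letters is rarely valid UTF-8 by accident. The C sketch below, with hypothetical function names, checks for a UTF-8 byte order mark and validates the UTF-8 structure, rejecting truncated sequences, overlong forms, surrogates, and values above U+10FFFF.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Returns true if buf starts with the UTF-8 byte order mark (EF BB BF). */
bool has_utf8_bom(const unsigned char *buf, size_t len)
{
    return len >= 3 && buf[0] == 0xEF && buf[1] == 0xBB && buf[2] == 0xBF;
}

/* Returns true if buf[0..len) is well-formed UTF-8. */
bool is_valid_utf8(const unsigned char *buf, size_t len)
{
    size_t i = 0;
    while (i < len) {
        unsigned char c = buf[i];
        size_t n;          /* length of the current sequence */
        uint32_t cp, min;  /* decoded value and smallest legal value */

        if (c < 0x80) {                      /* single ASCII byte */
            i++;
            continue;
        } else if ((c & 0xE0) == 0xC0) {
            n = 2; cp = c & 0x1F; min = 0x80;
        } else if ((c & 0xF0) == 0xE0) {
            n = 3; cp = c & 0x0F; min = 0x800;
        } else if ((c & 0xF8) == 0xF0) {
            n = 4; cp = c & 0x07; min = 0x10000;
        } else {
            return false;                    /* stray continuation or bad lead byte */
        }
        if (n > len - i) {
            return false;                    /* sequence cut off at end of buffer */
        }
        for (size_t k = 1; k < n; k++) {
            if ((buf[i + k] & 0xC0) != 0x80) {
                return false;                /* expected a 10xxxxxx byte */
            }
            cp = (cp << 6) | (buf[i + k] & 0x3F);
        }
        if (cp < min || cp > 0x10FFFF || (cp >= 0xD800 && cp <= 0xDFFF)) {
            return false;                    /* overlong, out of range, or surrogate */
        }
        i += n;
    }
    return true;
}
```

Keep in mind that pure ASCII is valid both as UTF-8 and as Latin-1, so a passing check is strong evidence rather than proof.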

Code-Level Solutions: In programming, specify the correct encoding when reading and writing files and when communicating with databases. Most programming languages provide functions or settings for this. It is best to convert data to a known encoding at the point where you read it. For example, in one setup using a Java servlet, IntelliJ IDEA, and MySQL, data entered in the front end was displayed as garbled text such as `«å™¨` after it was inserted.
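As a sketch of converting at read time, the C function below uses the POSIX `iconv` API (`iconv_open`, `iconv`, `iconv_close`) to turn bytes from a declared source encoding into UTF-8. The wrapper name `to_utf8` and its error convention are assumptions for this example; the encoding names accepted by `iconv_open` vary by platform (`"ISO-8859-1"` is assumed here), and some platforms require linking an extra library such as `-liconv`.

```c
#include <iconv.h>
#include <stddef.h>

/* Converts len bytes of in from the encoding named by from_enc to UTF-8.
   Writes at most out_size - 1 bytes plus a NUL terminator to out.
   Returns the number of bytes written, or (size_t)-1 on error
   (unsupported encoding, invalid input, or output buffer too small). */
size_t to_utf8(const char *from_enc, const char *in, size_t len,
               char *out, size_t out_size)
{
    if (out_size == 0) {
        return (size_t)-1;
    }
    iconv_t cd = iconv_open("UTF-8", from_enc);
    if (cd == (iconv_t)-1) {
        return (size_t)-1;                 /* encoding not supported */
    }

    char *inp = (char *)in;                /* iconv takes char ** for input */
    size_t in_left = len;
    char *outp = out;
    size_t out_left = out_size - 1;        /* reserve room for the terminator */

    size_t rc = iconv(cd, &inp, &in_left, &outp, &out_left);
    if (rc != (size_t)-1) {
        /* Flush any pending shift state (relevant for stateful encodings). */
        rc = iconv(cd, NULL, NULL, &outp, &out_left);
    }
    iconv_close(cd);
    if (rc == (size_t)-1) {
        return (size_t)-1;
    }
    *outp = '\0';
    return (size_t)(outp - out);
}
```

The key design choice is that the caller must name the source encoding explicitly, so the conversion happens once, at the boundary where the data enters the program.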

Testing and Validation: Throughout the development and deployment processes, it's crucial to test and validate that character encoding is handled correctly. Use tools and techniques to identify and resolve potential encoding issues early on. Character encoding issues can occur in any digital environment where text data is stored, exchanged, or displayed. By understanding the principles, common scenarios, and solutions, you can mitigate the problems and ensure your data remains accurate and readable.
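As one example of an automated check for a test suite, the heuristic below flags text that looks double-encoded, that is, UTF-8 that was decoded as Latin-1 and then saved as UTF-8 again. It is a sketch under that Latin-1 assumption (text mangled through Windows-1252 produces different byte patterns and is not detected), and the function name `looks_double_encoded` is hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* Heuristic check for double-encoded UTF-8. A UTF-8 lead byte 0xC2-0xF4
   misread as Latin-1 becomes U+00C2-U+00F4, stored as C3 82..C3 B4; the
   continuation byte after it becomes U+0080-U+00BF, stored as C2 80..C2 BF.
   Finding that four-byte pattern suggests mojibake; false positives are
   possible but rare in normal text. */
bool looks_double_encoded(const unsigned char *s, size_t len)
{
    for (size_t i = 0; i + 3 < len; i++) {
        if (s[i] == 0xC3 && s[i + 1] >= 0x82 && s[i + 1] <= 0xB4 &&
            s[i + 2] == 0xC2 && s[i + 3] >= 0x80 && s[i + 3] <= 0xBF) {
            return true;
        }
    }
    return false;
}
```

Running it over text columns or files in a test can surface `Ã©`-style damage before it reaches users.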

Microsoft Edge offers many extensions for personalizing the browser and working more productively. Some help when working with text, for example by translating it, checking spelling and grammar, or formatting it in different ways. These tools can improve your workflow when dealing with text-based data.

The mouse settings matter too: when using CAD, a mouse that does not work properly hinders the design process. In this case the environment is TFAS11 on Windows 10 Pro 64-bit, with a Logicool Anywhere MX mouse whose buttons are configured in SetPoint, and the mouse functions do not take effect when drawing in TFAS. If the problem is not related to encoding, check the following:

- Driver Compatibility: Ensure that the mouse drivers are up-to-date and compatible with your operating system (Windows 10 Pro 64-bit). Outdated or incompatible drivers can cause unexpected behavior.

- Mouse Settings: Configure the mouse settings within the TFAS11 environment. This includes adjusting the sensitivity, button assignments, and any special functions specific to your Logicool Anywhere MX mouse (e.g., gesture control).

- SetPoint Software: Make sure that the SetPoint software (used for button settings) is correctly installed and configured to work with TFAS11. Ensure that the custom settings for the mouse are applied to the application.

- TFAS11 Compatibility: Check if TFAS11 has any specific mouse settings or compatibility settings. Some CAD software may have its own ways of handling mouse input.

- Troubleshooting: If the mouse still doesn't function as expected, try resetting the mouse settings to default, restarting the application, and testing with a different mouse to rule out hardware problems.

Remember that it is essential to correct the bad characters themselves rather than just using hacks in the code. The latter can lead to inconsistencies, maintenance headaches, and further complications down the line. By adopting a proactive approach to character encoding and following these guidelines, you can significantly reduce the risk of encoding errors and ensure your data remains clear, accurate, and easily accessible.

Here are some examples of SQL queries to fix some of the most common encoding issues. These queries assume you are using a database system like MySQL, but similar principles apply to other database systems. Before running these queries, it's crucial to back up your database. First, let's discuss fixing incorrect characters. Here are the steps:

- Identify the Problem: Before you can fix encoding issues, you need to identify them. Examine your data for incorrect characters or mojibake.

- Determine the Correct Encoding: Determine the correct character encoding your data should be using (e.g., UTF-8).

- Backup Your Data: Back up your database before making any changes. This allows you to revert to the original state if something goes wrong.

- Run SQL Queries: Run SQL queries to correct the encoding issues. This may involve converting character sets, replacing incorrect characters, etc.

- Verify the Results: After running the queries, verify that the encoding issues are resolved by viewing your data.
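For the common case of double-encoded text (for example `cafÃ©` stored instead of `café`), the damaged characters themselves can often be repaired by reversing the mistake. The C sketch below, with hypothetical names, assumes exactly one round of UTF-8 text misread as Latin-1: it decodes the UTF-8, requires every character to be U+00FF or below, takes those values as raw bytes, and accepts the result only if it is valid UTF-8.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Decodes one UTF-8 sequence from s (n bytes available) into *cp.
   Returns its length (1-4), or 0 if the bytes are not valid UTF-8. */
static size_t utf8_next(const unsigned char *s, size_t n, uint32_t *cp)
{
    size_t len;
    uint32_t min;
    if (n == 0) return 0;
    if (s[0] < 0x80) { *cp = s[0]; return 1; }
    if ((s[0] & 0xE0) == 0xC0)      { len = 2; *cp = s[0] & 0x1F; min = 0x80; }
    else if ((s[0] & 0xF0) == 0xE0) { len = 3; *cp = s[0] & 0x0F; min = 0x800; }
    else if ((s[0] & 0xF8) == 0xF0) { len = 4; *cp = s[0] & 0x07; min = 0x10000; }
    else return 0;
    if (len > n) return 0;
    for (size_t i = 1; i < len; i++) {
        if ((s[i] & 0xC0) != 0x80) return 0;
        *cp = (*cp << 6) | (s[i] & 0x3F);
    }
    if (*cp < min || *cp > 0x10FFFF || (*cp >= 0xD800 && *cp <= 0xDFFF))
        return 0;
    return len;
}

/* Tries to undo one round of "UTF-8 decoded as Latin-1, re-encoded as UTF-8".
   On success writes the repaired, NUL-terminated text to out (which needs
   len + 1 bytes), stores its length in *out_len, and returns true.
   Returns false if the text does not fit that pattern; keep the original. */
bool repair_double_encoded(const unsigned char *in, size_t len,
                           unsigned char *out, size_t *out_len)
{
    size_t i = 0, o = 0;
    while (i < len) {
        uint32_t cp;
        size_t step = utf8_next(in + i, len - i, &cp);
        if (step == 0 || cp > 0xFF) {
            return false;                  /* cannot be a Latin-1 round trip */
        }
        out[o++] = (unsigned char)cp;      /* code point becomes the raw byte */
        i += step;
    }
    out[o] = '\0';
    /* The recovered bytes must themselves be valid UTF-8. */
    for (size_t k = 0; k < o;) {
        uint32_t cp;
        size_t step = utf8_next(out + k, o - k, &cp);
        if (step == 0) {
            return false;
        }
        k += step;
    }
    *out_len = o;
    return true;
}
```

When the function returns `false`, the text does not match the double-encoding pattern and should be left alone; check any repaired values before writing them back.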

Here are some example SQL queries to fix common encoding issues. Adapt them to your specific database structure and requirements.

Example 1: Converting Character Set of a Table

If a table is using the wrong character set (e.g., Latin1 instead of UTF-8), you can convert it with the following query:

```sql
ALTER TABLE your_table_name CONVERT TO CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
```

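Replace `your_table_name` with the name of your table. This query converts the table and all its text columns to `utf8mb4`, MySQL's full UTF-8 character set (the older `utf8` alias, also called `utf8mb3`, stores at most three bytes per character and cannot hold characters such as emoji). The conversion assumes the stored data really is in the column's current character set; if it is not, the result can be double-encoded text.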

Example 2: Converting Character Set of a Column

If only a specific column has encoding issues, you can convert just that column:

```sql
ALTER TABLE your_table_name MODIFY your_column_name VARCHAR(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
```

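Replace `your_table_name` with the table name and `your_column_name` with the name of the problematic column. This query changes the specified column to `utf8mb4`. Because `MODIFY` replaces the entire column definition, restate the column's existing type, length, and attributes (such as `NOT NULL` or `DEFAULT`); otherwise they are lost.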

Example 3: Replacing Incorrect Characters

If you have specific incorrect characters in your data, you can use the `REPLACE` function to replace them.

```sql
UPDATE your_table_name SET your_column_name = REPLACE(your_column_name, 'incorrect_character', 'correct_character');
```

Replace `your_table_name`, `your_column_name`, `incorrect_character`, and `correct_character` with your own values; for example, replace a known garbled sequence with the character it was meant to be.

It is also a good practice to analyze the table to identify the root cause of these issues. You can run the command `SHOW CREATE TABLE your_table_name;` to see the character set and collation of the table. This helps you understand how the table is configured and whether it is set up to support your desired encoding. Also, when using the `REPLACE` function, make sure you understand what you are replacing. The more specific your replacement rules are, the more accurate your results will be.

The original question, "I have a question about mouse settings when using CAD," concerns mouse configuration, so follow the guide below to check that your settings are correct. The environment is TFAS11 on Windows 10 Pro 64-bit, with a Logicool Anywhere MX mouse whose buttons are configured in SetPoint, and the mouse functions do not work as expected when drawing in TFAS. If you have the same problem, consider the following.

First, update your drivers. Outdated drivers are a common cause of mouse problems, and the latest versions can be downloaded from the manufacturer's website.

Next, check the mouse settings within TFAS11; the available options may vary between versions of the software. Look for options related to mouse sensitivity, acceleration, and button configuration, and tune them to your workflow in TFAS11. Also check the operating system's own mouse properties: Windows 10 has its own pointer speed and acceleration ("Enhance pointer precision") settings, and if these are misconfigured, the mouse may not behave as expected in any application.

Consider the environment in which the mouse is used (general office work, gaming, or design work), because suitable settings differ between them. If the mouse still does not work, isolate the cause: test the mouse on another computer, or connect a different mouse to your PC, and see whether the problem persists. If the mouse also misbehaves on other devices, it may have a hardware fault.

Because the user has a Logicool Anywhere MX, make sure the SetPoint software is installed and configured to work with TFAS11. SetPoint is used to customize the mouse buttons, letting you assign the functions you want in your CAD software, so check that these custom settings are actually applied to TFAS11. If the problem persists, contact the Logicool support team for assistance.

Here is a C program that replaces or removes all vowels in a string:

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* Replaces vowels with '*' or removes them, modifying str in place. */
void processString(char *str, int removeVowels)
{
    size_t i, j;
    size_t len = strlen(str);

    for (i = 0, j = 0; i < len; i++) {
        /* Lowercase for comparison; the cast avoids undefined behavior
           for negative char values. */
        char ch = (char)tolower((unsigned char)str[i]);
        int isVowel = (ch == 'a' || ch == 'e' || ch == 'i' ||
                       ch == 'o' || ch == 'u');

        if (!isVowel) {
            str[j++] = str[i];      /* keep non-vowels unchanged */
        } else if (!removeVowels) {
            str[j++] = '*';         /* replace the vowel with '*' */
        }                           /* otherwise drop the vowel */
    }
    str[j] = '\0';                  /* null-terminate the modified string */
}

int main(void)
{
    char str[100];
    int removeVowels;

    printf("Enter a string: ");
    if (fgets(str, sizeof(str), stdin) == NULL) {
        return 1;
    }
    str[strcspn(str, "\n")] = '\0'; /* remove trailing newline */

    printf("Remove vowels? (1 for yes, 0 for no): ");
    if (scanf("%d", &removeVowels) != 1) {
        return 1;
    }

    processString(str, removeVowels);
    printf("Modified string: %s\n", str);
    return 0;
}
```

To handle other characters in the same way, add them to the comparison in `processString`. Note that this approach compares one byte at a time, so it works only for single-byte (ASCII) characters; a non-ASCII character such as `é` occupies two bytes in UTF-8 and cannot be matched as a single `char`.

The `strstr()` function checks whether a substring occurs within a string and returns a pointer to its first occurrence. It is not well suited to testing individual characters such as vowels; for that, `strchr()`, which finds a single character in a string, is a better fit, for example to test whether a character belongs to a set like `"aeiou"`.

With these changes, the program can replace or remove any set of characters. The process stays the same; you only need to add each character you want to handle.
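A generalized version of `processString` might take the set of characters as a parameter and use `strchr()` to test membership. The sketch below assumes single-byte (ASCII) characters and a set written in lowercase; the function name `process_chars` is hypothetical.

```c
#include <ctype.h>
#include <stdbool.h>
#include <string.h>

/* Removes (remove == true) or replaces with '*' (remove == false) every
   character of str that appears in set, ignoring case. set must be
   lowercase and contain single-byte characters only. */
void process_chars(char *str, const char *set, bool remove)
{
    size_t j = 0;
    for (size_t i = 0; str[i] != '\0'; i++) {
        int lower = tolower((unsigned char)str[i]);
        /* lower is never 0 here, so strchr cannot match the terminator. */
        bool in_set = strchr(set, lower) != NULL;

        if (!in_set) {
            str[j++] = str[i];      /* keep characters outside the set */
        } else if (!remove) {
            str[j++] = '*';         /* replace characters in the set */
        }                           /* otherwise drop the character */
    }
    str[j] = '\0';
}
```

For example, `process_chars(s, "aeiouy", false)` also treats `y` as a vowel and turns `"Hello World"` into `"H*ll* W*rld"`.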

Serenity (2019) ๠ผนลวงฆ่า เภาะพิศวà

![Breaking (2022) [1080p] [พาภย์à¸à¸±à¸‡à¸ ฤษ 5.1] [ซั](https://i.imgur.com/RSSis3o.jpeg)

Breaking (2022) [1080p] [พาภย์à¸à¸±à¸‡à¸ ฤษ 5.1] [ซั

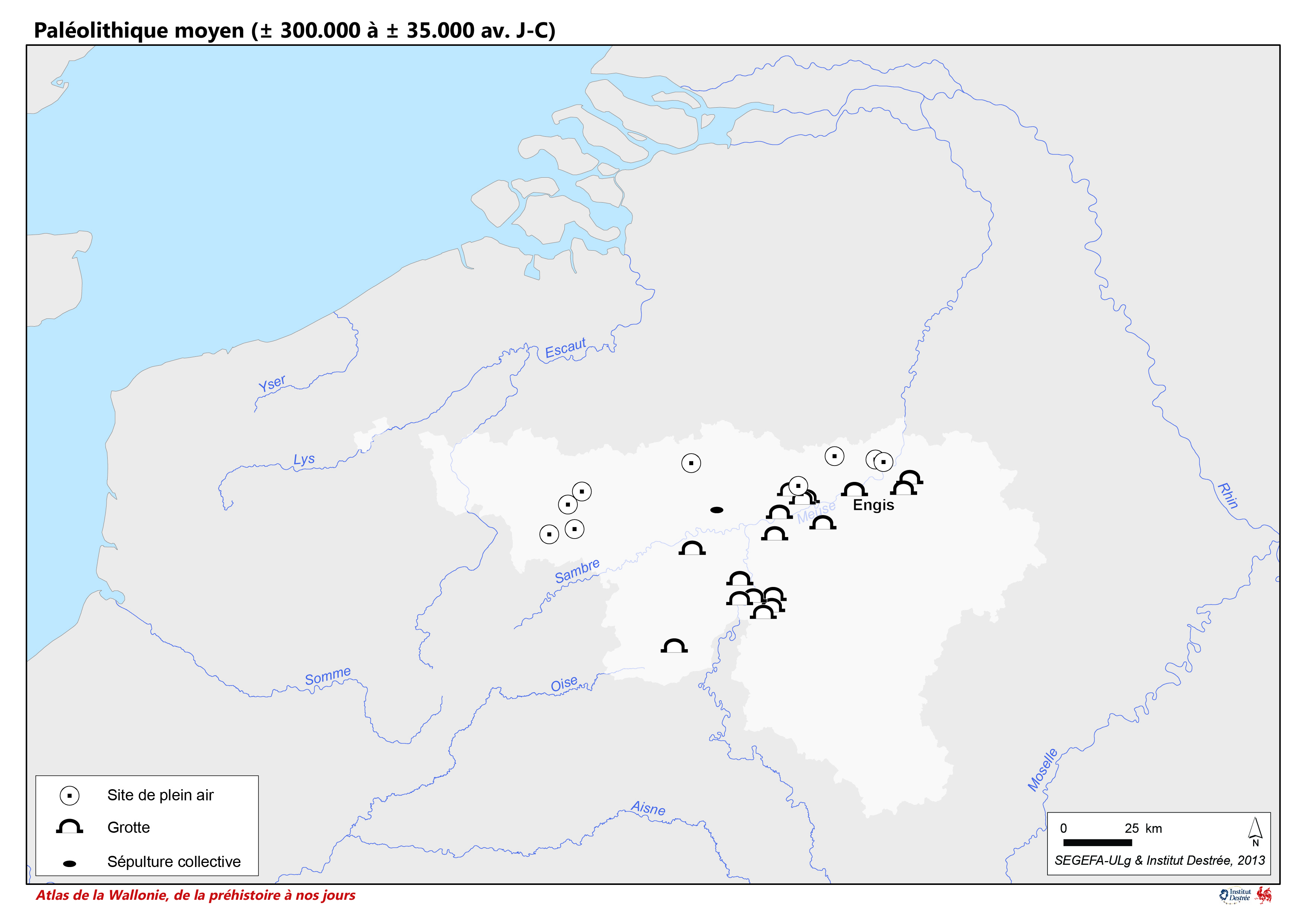

Paléolithique moyen (± 300.000 à ± 35.000 av. J C) Connaître la Wallonie